Exploratory research based on the PMDD project at the Cognitive Neuroendocrinology (EGG) Lab and the reviewers of Biological Psychiatry

VR-compatible online experiment setup to investigate depth effects of face similarity judgements using UXF (the Unity Experiment Framework)

Studying the immediate effect of brief spans of deep breathing on attention, memory and mood in graduate students.

Aiming for an automated and simplified polysomnography system built on a comfortably wearable Brain-Computer Interface, this project performs real-time 5-stage sleep classification using Deep Learning in an on-smartphone Android application with TensorFlow Lite.

Brain strokes are among the most common causes of death during accidents in India. Ensuring life-saving treatment requires fast identification of the type of brain stroke - hemorrhagic or ischemic. This project aims to automate the classification of brain strokes using 3D Convolutional Neural Networks on 500+ CT brain scans obtained in collaboration with radiologists from the best hospitals in Karnataka, India.

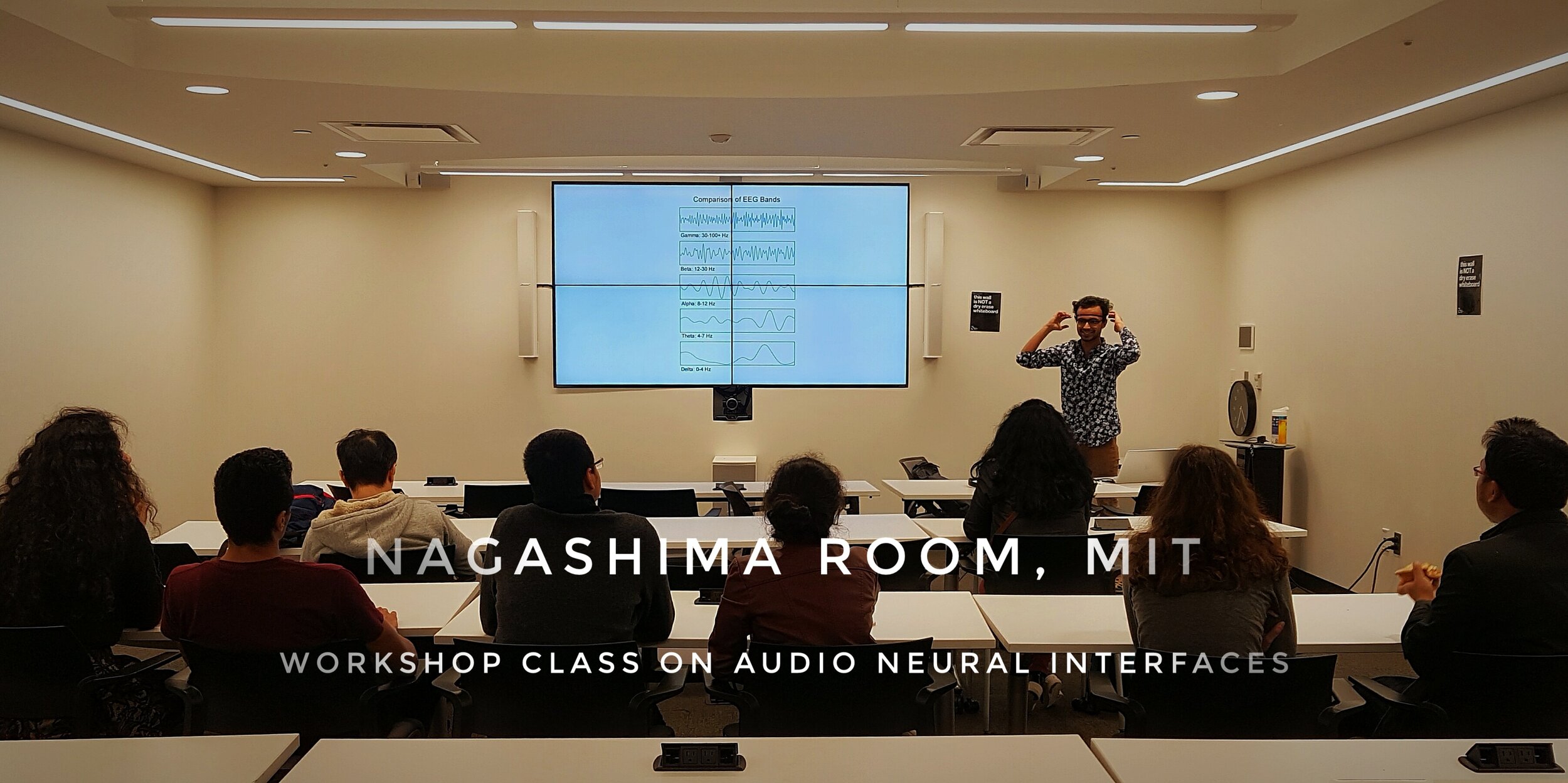

In this workshop, I present a platform for rendering brain activity as audio and feeding it back to the brain as neural feedback, creating a Brain-Music loop. This Audio Neural Interface provides real-time audio feedback of “calmness”, which is directly proportional to the power of the alpha spectral band, and “creativity”, expressed as the alpha-theta ratio. It uses the power of EEG spectral sub-bands computed from a Muse headband to drive a controllable rhythmic output of frequencies, creating an immersive audio experience ranging from simple musical notes to trance music.
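The two feedback quantities described above can be illustrated with a minimal sketch. It is not the workshop's actual code: the band edges (theta 4-8 Hz, alpha 8-13 Hz), the 256 Hz sample rate, the synthetic test signal and the function names `band_power` and `feedback_metrics` are all assumptions made for illustration, and a simple windowed periodogram stands in for whatever spectral estimator the project used.

```python
import numpy as np

# Conventional band edges in Hz; assumed here, not taken from the project.
THETA_BAND = (4.0, 8.0)
ALPHA_BAND = (8.0, 13.0)


def band_power(signal, sample_rate, band):
    """Mean periodogram power of `signal` inside the band [low, high)."""
    samples = np.asarray(signal, dtype=float)
    samples = samples - samples.mean()              # remove the DC offset
    windowed = samples * np.hanning(samples.size)   # reduce spectral leakage
    spectrum = np.abs(np.fft.rfft(windowed)) ** 2
    freqs = np.fft.rfftfreq(samples.size, d=1.0 / sample_rate)
    low, high = band
    mask = (freqs >= low) & (freqs < high)
    return float(spectrum[mask].mean())


def feedback_metrics(signal, sample_rate):
    """Return the two feedback values: alpha power and alpha-theta ratio."""
    alpha = band_power(signal, sample_rate, ALPHA_BAND)
    theta = band_power(signal, sample_rate, THETA_BAND)
    return {"calmness": alpha, "creativity": alpha / theta}


# Synthetic 4-second "EEG" trace: a strong 10 Hz (alpha) component
# plus a weaker 6 Hz (theta) component. Hypothetical data for the demo.
sample_rate = 256
t = np.arange(0, 4.0, 1.0 / sample_rate)
eeg = 2.0 * np.sin(2 * np.pi * 10.0 * t) + 0.5 * np.sin(2 * np.pi * 6.0 * t)

metrics = feedback_metrics(eeg, sample_rate)
print(metrics)
```

In a live setting, these values would be recomputed over a sliding window of headband samples and mapped to audio parameters such as pitch or tempo.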

This project involved developing an automated Yoga-Trainer with the HoloSuit - a full-body wearable for real, virtual and augmented worlds. It uses Deep Learning for yoga pose classification in simulated environments through Microsoft Kinect, with Dynamic Time Warping to compare the captured 3D motions of a yoga posture through HoloSuit. Additional features include finger-based gesture recognition for motion capture.
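As a rough illustration of how Dynamic Time Warping can compare two motion recordings performed at different speeds, here is a minimal textbook sketch. It treats each frame as a single 3D point; the real HoloSuit data format, joint set and distance measure are not described here, so the function name `dtw_distance` and the toy sequences are assumptions.

```python
import math


def dtw_distance(seq_a, seq_b):
    """Dynamic Time Warping distance between two sequences of points.

    Each element is a tuple of coordinates; frames are compared with the
    Euclidean distance (an assumed choice for this sketch).
    """
    n, m = len(seq_a), len(seq_b)
    inf = float("inf")
    # cost[i][j] = best alignment cost of the first i and first j frames.
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            frame_gap = math.dist(seq_a[i - 1], seq_b[j - 1])
            cost[i][j] = frame_gap + min(
                cost[i - 1][j],      # seq_b frame held while seq_a advances
                cost[i][j - 1],      # seq_a frame held while seq_b advances
                cost[i - 1][j - 1],  # both advance together
            )
    return cost[n][m]


# Hypothetical toy trajectories of one joint.
reference = [(0, 0, 0), (1, 0, 0), (2, 0, 0)]
slower = [(0, 0, 0), (0, 0, 0), (1, 0, 0), (1, 0, 0), (2, 0, 0)]
different = [(0, 0, 0), (0, 1, 0), (0, 2, 0)]

print(dtw_distance(reference, slower))     # same motion, slower pace
print(dtw_distance(reference, different))  # a different motion
```

The same motion performed more slowly aligns at zero cost, while a different motion yields a positive distance, which is what makes the measure useful for scoring a posture against a reference.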

This Best Hack using OpenAI API at TreeHacks 2021 creates machine-generated comics - cartoonized dialogues between critically endangered animals who are deeply concerned about climate change and global warming. We developed a pipeline that uses OpenAI's GPT-3 to generate the dialogue and CartoonGAN, a Generative Adversarial Network published at CVPR 2018, to produce cartoon-stylized endangered animals.

By computationally analysing dynamic pixels to calculate a respiration signal, I tested and optimized the optical flow algorithm as part of evaluating my supervisor’s project, described in the research paper “Real Time Visual Respiration Rate Estimation with Dynamic Scene Adaptation”, using the OpenCV Python library. This video-based respiration detection technology, proposed by Xerox Research and the Indian Institute of Science, Bengaluru, is used in CocoonCam.

This Best Hardware Hack at HackHarvard 2018 involves real-time recognition of silent vowels. We developed a proof-of-concept model for capturing unspoken sounds through magnetometers. Applications of this working concept include silent alarms for conveying patients’ pain in medical settings and empowerment of people who cannot speak.

We developed a proof-of-concept maze solver to spark scientific curiosity in children who visit the Science Center - Birla Planetarium and Periyar Institute of Science and Technology. Using ultrasound sensors with an Arduino board, we built a prototype with optimized maze-solving capabilities using the Left-Hand-on-Wall algorithm.
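The left-hand-on-wall rule can be sketched as a small grid simulation. This is an illustrative stand-in rather than the Arduino firmware: the text grid replaces the ultrasound readings, and the maze layout, the function name `solve_left_hand` and the step limit are all assumptions. The rule is only guaranteed to reach the exit when the maze walls are connected to the outer boundary.

```python
# Headings in clockwise order: north, east, south, west as (row, col) offsets.
DIRECTIONS = [(-1, 0), (0, 1), (1, 0), (0, -1)]


def solve_left_hand(maze, start, goal, heading=1, max_steps=10_000):
    """Follow the left wall from `start` to `goal`.

    Returns the list of visited cells, or None if the goal is not reached
    within `max_steps` moves. `heading` indexes DIRECTIONS (1 = east).
    """
    def is_open(row, col):
        in_bounds = 0 <= row < len(maze) and 0 <= col < len(maze[row])
        return in_bounds and maze[row][col] != "#"

    position = start
    path = [position]
    for _ in range(max_steps):
        if position == goal:
            return path
        # Preference order: turn left, go straight, turn right, turn back.
        for turn in (-1, 0, 1, 2):
            candidate = (heading + turn) % 4
            d_row, d_col = DIRECTIONS[candidate]
            next_cell = (position[0] + d_row, position[1] + d_col)
            if is_open(*next_cell):
                heading = candidate
                position = next_cell
                path.append(position)
                break
        else:
            return None  # walled in on all four sides
    return None


# Hypothetical maze: '#' is a wall, 'S' the start, 'E' the exit.
MAZE = [
    "#######",
    "#S  #E#",
    "# # # #",
    "# #   #",
    "#######",
]

path = solve_left_hand(MAZE, start=(1, 1), goal=(1, 5))
print(path)
```

On the physical prototype, the `is_open` check would be replaced by distance readings from the left, front and right sensors.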

As a panelist in the ‘Protecting Our Earth Panel’ hosted by the Guardianship of Ecosystems track at the BioSummit 2019, I spoke about how raising awareness and educating people are crucial steps towards conservation of the wild and pristine. Highlighting the importance of sharing and encouraging local efforts to conserve local ecosystems and biodiversity, I presented a global perspective on how we can involve ourselves in the conservation of our Planet Earth. Further, I spoke about leveraging social media not only to create awareness but also to act on local conservation efforts.

Social VR Cognition

A 3D Emotion Database for neuroscientific Studies in VR

Supervisors: Dr. Julie Grezes, Dr. Rocco Mennella

Creation and Validation of a 3D Emotion Database for investigating Approach-Avoidance Decisions in Socio-Emotional Contexts in VR Neuroscientific Experiments